
Considering the Future of AI in Real World Data

In today’s data ecosystem, where technology moves at mind-boggling speeds, there is always a seasonal buzzword or phrase that comes up with notable frequency. Some of those hot topics stick around and get woven into the fabric of everyday operation, as “electronic health records” did in hospitals. Others fizzle out or become considerably less prominent over time as people realize where they fit in the big picture.

The phrase I’m hearing a lot these days in health data science conversations is artificial intelligence (AI), which has recently overtaken the phrase machine learning (ML) in popularity. Both of those things are here to stay, but how they will affect the everyday lives of healthcare professionals in various settings beyond data science is an open question. Let’s unpack some of the discussions of AI in healthcare that I am hearing.

Definitions

I hear AI and ML used interchangeably so often that I must remind myself they are different things. AI aims to recreate human intelligence by employing many tools. ML is one of those tools, along with others like natural language processing (NLP) and neural networks or other deep learning models. For people who only want a superficial explanation, I describe ML algorithms as “predictive models on steroids.” Generative AI includes tools like DALL-E, which generates novel images from text descriptions of a scene. You can try your hand at DALL-E from OpenAI to see a generative AI example firsthand.

Applications in Healthcare Settings

Some aspects of artificial intelligence are already affecting healthcare, from algorithms to assist radiologists in tumor detection to early-stage drug development tools for identifying novel targets. But for this post, I want to focus on AI applications and real-world data (RWD). While RWD may come from social media, patient-collected registries, and more, my experience is that most people are specifically talking about data from electronic health records when they mention RWD, so let’s explore that.

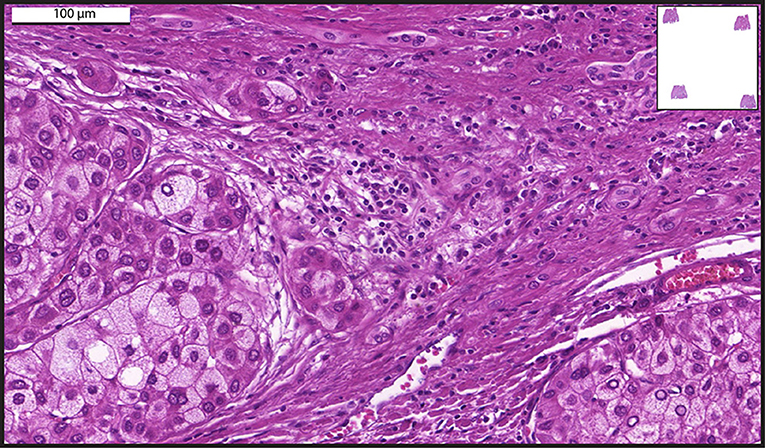

What’s possible today with existing tools and data sets within hospital systems includes the development of ML tools to detect tumors in images. I’ve personally benefited from algorithm-assisted MRI interpretation, but there is room to keep working on all kinds of image classes and subjects. Training data sets require both images in a consistent format and associated annotations. For example, I would harvest many CT images of the liver along with text files indicating whether each patient did or did not have liver cancer at the time of imaging. Quantum Leap Healthcare Collaborative is already sharing a rich dataset of deidentified whole slide images from biopsies, along with cancer metadata for the corresponding patients, and is inviting outside groups to train models.
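To make the pairing of images with annotations concrete, here is a minimal Python sketch that builds a training manifest. It assumes a hypothetical layout in which each image file (here `.dcm`) has a matching `.txt` annotation file with the same name in a separate folder, and that labels use a hypothetical two-value vocabulary; a real dataset would follow whatever conventions its provider documents. Images without a usable annotation are reported rather than silently dropped.

```python
from pathlib import Path

# Hypothetical label vocabulary; real annotation files would follow whatever
# convention the data provider documents.
VALID_LABELS = {"liver_cancer", "no_liver_cancer"}


def build_manifest(
    image_dir: Path, label_dir: Path, image_suffix: str = ".dcm"
) -> tuple[list[tuple[Path, str]], list[str]]:
    """Pair each image with the label file sharing its stem (hypothetical layout).

    Returns (pairs, problems): (image path, label) pairs ready for training,
    plus human-readable reasons why other images were left out.
    """
    pairs: list[tuple[Path, str]] = []
    problems: list[str] = []
    for image_path in sorted(image_dir.glob(f"*{image_suffix}")):
        label_path = label_dir / f"{image_path.stem}.txt"
        if not label_path.exists():
            problems.append(f"{image_path.name}: no annotation file")
            continue
        label = label_path.read_text().strip()
        if label not in VALID_LABELS:
            problems.append(f"{image_path.name}: unexpected label {label!r}")
            continue
        pairs.append((image_path, label))
    return pairs, problems
```

Keeping the `problems` list makes it easy to see how much of a harvested image set is actually usable before any model is trained.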

An example of whole slide imaging.

Because health records are often embedded with free text, from visit summaries to physician notes, there is a lot of buzz around natural language processing to elucidate patterns and develop standardized patient profiles. My experience gathering RWD from hospitals included challenges with simply identifying a diagnosis. Sometimes a diagnosis is recorded as an ICD code, if the provider enters one, or as a SNOMED CT code; both are maintained as standard, interoperable systems of classification. CPT codes can be expected more reliably in the USA because they’re tied to billing for procedures, but they don’t always capture comorbidities if the patient visit is related to something else. Sometimes physicians note a diagnosis after a visit and reference it only as text, possibly using various forms of the disease or condition name, spelling, or abbreviation. Combinations of NLP and ML models can help sift through large amounts of codes and text to classify a patient more consistently as having or not having a condition. Or at least that is the goal.
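As a rough illustration of combining codes and free text, the sketch below flags type 2 diabetes using ICD-10-CM codes starting with E11, the SNOMED CT concept 44054006, and a regular expression for common spellings and abbreviations in notes. It is a rule-based stand-in, not the NLP and ML models described above: the code lists are hand-picked for illustration, the `PatientRecord` type is hypothetical, and the negation check is deliberately crude. Returning the evidence alongside the decision makes it easier to audit why a patient was classified a certain way.

```python
import re
from dataclasses import dataclass, field

# Illustrative code lists for type 2 diabetes. A real phenotype would use a
# curated, versioned value set rather than these hand-picked entries.
ICD10_PREFIXES = ("E11",)      # ICD-10-CM: type 2 diabetes mellitus
SNOMED_CODES = {"44054006"}    # SNOMED CT: diabetes mellitus type 2

# Spelling and abbreviation variants a clinician might type in a note.
MENTION = re.compile(
    r"\b(type\s*(2|ii)\s*diabetes|t2dm|dm\s*2|dm\s*ii|niddm)\b", re.IGNORECASE
)
# Very crude negation cue: a negating word shortly before the mention,
# within the same sentence.
NEGATION = re.compile(r"\b(no|denies|without|negative for)\b[^.]{0,30}$", re.IGNORECASE)


@dataclass
class PatientRecord:  # hypothetical, simplified record shape
    icd10: list[str] = field(default_factory=list)
    snomed: list[str] = field(default_factory=list)
    notes: list[str] = field(default_factory=list)


def classify(record: PatientRecord) -> tuple[bool, list[str]]:
    """Return (has_condition, evidence) from structured codes and note text."""
    evidence: list[str] = []
    for code in record.icd10:
        if code.replace(".", "").upper().startswith(ICD10_PREFIXES):
            evidence.append(f"ICD-10 {code}")
    for code in record.snomed:
        if code in SNOMED_CODES:
            evidence.append(f"SNOMED CT {code}")
    for note in record.notes:
        for match in MENTION.finditer(note):
            # Skip mentions such as "denies type 2 diabetes".
            if NEGATION.search(note[: match.start()]):
                continue
            evidence.append(f"note text: {match.group(0)!r}")
    return bool(evidence), evidence
```

For example, `classify(PatientRecord(notes=["Hx of T2DM, on metformin."]))` finds the abbreviation in the note even though no diagnosis code was entered.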

Generative AI comes into discussion these days with respect to its potential to interact with a clinician and provide background information about disease progression, or to help with suggested treatment options. Assuming the most qualified physician at their well-rested, undistracted peak is seeing me in the emergency room, I still shudder to think about the deluge of text and notes and screens and tabs that are provided to that physician and how easy it would be to miss a relevant note. I once woke from emergency surgery with a broad-spectrum antibiotic for possible blood infection or even sepsis. I had to argue and explain that I don’t have a spleen and my white blood cell count is often shockingly high when I am stressed before I could convince that attending to discontinue antibiotics. Imagine if generative AI existed to sift through my records over years and tell that care team that they should note asplenia and rule out infection if unaccompanied by fever and other symptoms?

Current Challenges and Obstacles

Returning to my dream of AI that could not just create a dashboard of patient features but curate the variables of importance based on an individual patient’s profile: this functionality is hampered by current obstacles. I had not been seen at that hospital since my spleen was removed, so my asplenia was not in its records. There is no consistent interoperability between hospital systems. If I see a new doctor, that office needs to request copies of my records (which I have to tell them exist!), and those records may be appended as PDFs or in other forms. Some hospital systems do share common patient records (I’ve seen it as a patient), but they may not store data in the same fields or with the same standards. A recent Nature Medicine article walks through this reality more completely than I will here.
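One small piece of the interoperability problem, the same information stored in different fields and formats, can be sketched as a mapping from each source into one shared record type. The two hospital export layouts below (`pt_id`, `dx_code`, `MRN`, `encounter_date`, and so on) are hypothetical, as is the assumption that hospital B records only ICD-10-CM codes. This only harmonizes structure: the two patient identifiers still come from different systems, so matching them to the same person would need separate record linkage.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class Diagnosis:
    """Shared target shape for diagnoses from any source."""
    patient_id: str
    code_system: str   # e.g. "ICD-10-CM" or "SNOMED CT"
    code: str
    recorded_on: date


def from_hospital_a(row: dict) -> Diagnosis:
    # Hypothetical export: ISO dates, code system stored in its own column.
    return Diagnosis(
        patient_id=row["pt_id"],
        code_system=row["dx_system"],
        code=row["dx_code"],
        recorded_on=date.fromisoformat(row["visit_dt"]),
    )


def from_hospital_b(row: dict) -> Diagnosis:
    # Hypothetical export: US-style dates, ICD-10-CM codes only, inconsistent case.
    return Diagnosis(
        patient_id=row["MRN"],
        code_system="ICD-10-CM",
        code=row["diagnosis"].strip().upper(),
        recorded_on=datetime.strptime(row["encounter_date"], "%m/%d/%Y").date(),
    )
```

Each new source gets its own small adapter, which keeps the quirks of one system from leaking into analyses built on the shared `Diagnosis` type.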

Once we get past hospitals’ lack of interoperability, we must face a lack of longitudinality in RWD records. Electronic health records from hospitals are a snapshot of a patient healing from surgery or an acute illness. That patient will go on to see their primary care physician, various pharmacies, maybe pop into a standalone clinic for influenza treatment, get mammograms at an unrelated community radiology center, or move to a new city and follow up with completely new providers. All of this makes it extremely challenging to get a complete picture of a patient’s environmental exposures, disease progression, treatments, and outcomes.
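The longitudinality problem can be illustrated with a sketch that merges encounters from several providers into one timeline and measures the longest stretch with no recorded care. The `Encounter` type and the source labels are hypothetical, and a long gap is only a crude hint that care happened somewhere we have no data from, not proof of it.

```python
from dataclasses import dataclass
from datetime import date
from itertools import chain


@dataclass(frozen=True)
class Encounter:  # hypothetical, simplified encounter record
    when: date
    source: str        # e.g. "hospital", "primary care", "pharmacy"
    description: str


def build_timeline(*sources: list[Encounter]) -> list[Encounter]:
    """Merge encounter lists from separate providers into one dated timeline,
    dropping exact duplicates (the same event reported by two sources)."""
    merged = set(chain.from_iterable(sources))
    return sorted(merged, key=lambda e: (e.when, e.source, e.description))


def longest_gap_days(timeline: list[Encounter]) -> int:
    """Days in the longest stretch between consecutive recorded encounters."""
    dates = sorted({e.when for e in timeline})
    return max(((later - earlier).days for earlier, later in zip(dates, dates[1:])), default=0)
```

Exact-duplicate removal is the simplest possible choice here; real records describing the same event in different words would need fuzzier matching.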

Another obvious and, for me, the most ominous obstacle to generative AI in healthcare is its potential to increase an already troubling bias in the way we treat people of various genders, races, ethnicities, and socioeconomic backgrounds. When I read about that creepy chatbot interaction in the New York Times, I thought about how much greater the potential for harm is if we unleash biased AI in treatment settings. When we consider basic realities like some people’s reluctance to see doctors at all, or the dearth of quality data from low-income settings with underfunded hospitals, it becomes easy to catastrophize. All of us will have to work hard to overcome interoperability barriers and create complete patient records over time, but that can be achieved whether the technology is biased or not. Representing people in an unbiased way is likely impossible if we simply rely on the people who show up in hospitals and assume they were all diagnosed and treated equally.
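One narrow, measurable piece of the bias concern is whether groups appear in a dataset in proportion to the population a model is meant to serve. The sketch below compares each group’s share of a dataset with a reference share supplied by the caller; the group labels and reference figures are assumptions you would need to source yourself. A ratio near 1.0 says nothing about whether those patients were diagnosed and treated equally, which is the deeper problem raised above.

```python
from collections import Counter


def representation_ratios(
    dataset_groups: list[str], reference_shares: dict[str, float]
) -> dict[str, float]:
    """Ratio of each group's share in the dataset to its share in a reference
    population (caller-supplied, hypothetical figures). Values well below 1.0
    suggest the group is under-represented in the data."""
    counts = Counter(dataset_groups)
    total = len(dataset_groups)
    return {
        group: (counts[group] / total) / share
        for group, share in reference_shares.items()
        if share > 0 and total > 0
    }
```

For instance, a group making up 20% of records but 40% of the reference population gets a ratio of about 0.5.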

Still, the future improvement of health and outcomes demands that we face these challenges with open eyes. I don’t believe any of us can wait for all those problems to be solved before we dive in and work on developing tools to improve human health. As the dubiously attributed Greek and Chinese mixed proverbs go, “The best time to plant a tree was 20 years ago, but society grows great when old men plant trees whose shade they know they shall never sit in.”

Or something like that.

February 9, 2024

Amanda Borens

Amanda (M.S.) joined Aridhia in 2022 and serves as Chief Data Officer. She is a data science leader with 25+ years’ experience across public and private sectors, including academia, healthcare, and biotech. Amanda has a unique breadth of life science data curation and analysis experience, having led technical teams in all phases of the FDA-regulated medical software development lifecycle for customers on three continents as well as in medical device development where her team achieved FDA clearance and CE Mark from EMA. This breadth of experience helps her provide innovative data solutions to solve scientific problems while respecting global regulatory requirements.