
Federated Node AI: A Prototype for Prompt-Based Data Interaction

We’ve written extensively about the development of the Federated Node (FN) over the past 18 months, covering its origins in the PHEMS project, its V1.0 release and its integration with the Aridhia Digital Research Environment (DRE).

The FN is primarily being developed to meet the PHEMS use cases around clinical benchmarking and federated learning, though we expect this functionality will have wider applicability outside of the PHEMS consortium. That work is ongoing, and we will likely provide an update in the near future on the development of the PHEMS federated learning network.

However, the purpose of this article is not to provide a further update on the main development stream of the Federated Node, but to describe some experimental work we carried out over the summer, integrating the FN with a deployed Small Language Model (SLM) to perform federated analysis. It covers:

- Why? The potential benefits of AI

- What? Our success criteria and the limitations of using SLMs for data analysis

- How? An overview of the Federated Node AI

- Next steps

Why? The potential benefits of AI

All established data federation frameworks require users to have a degree of familiarity with, and competency in, at least one programming language. This presents a significant barrier to entry for users with no coding experience, and a significant impediment to good analysis for novice users with limited coding skills.

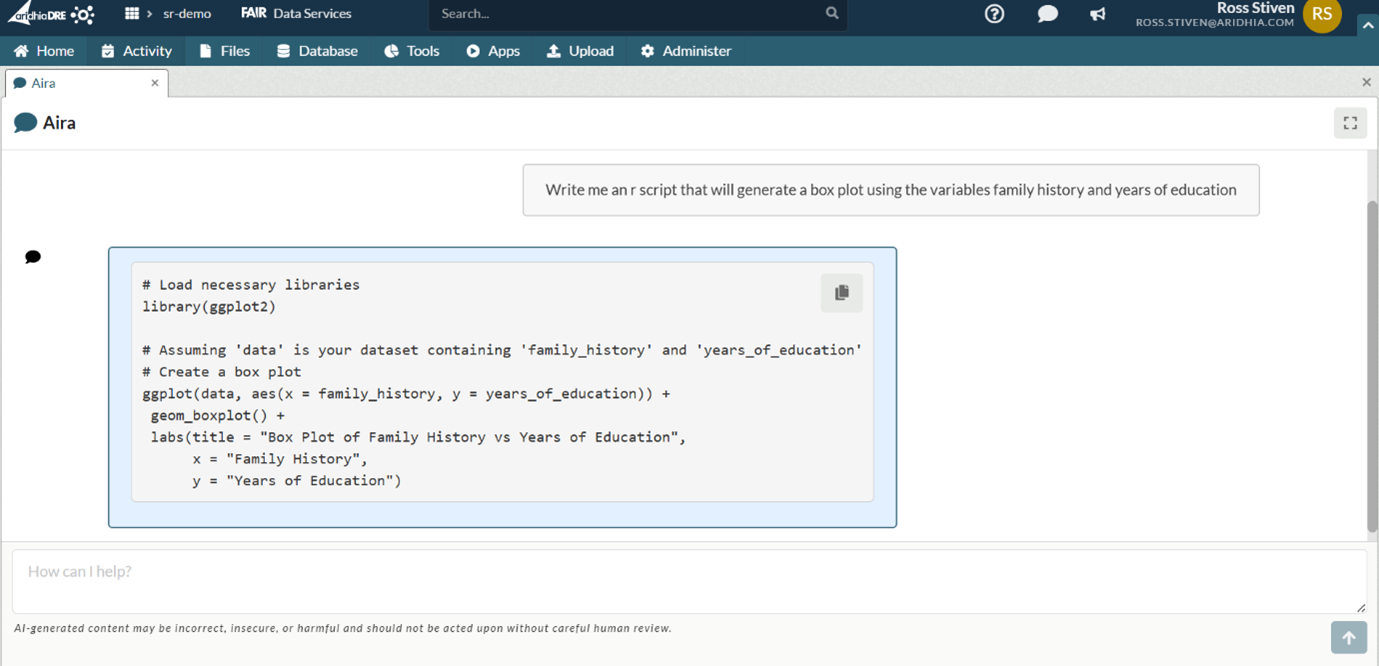

Despite their current known limitations, one task that SLMs can perform well is writing small amounts of code. The Aridhia DRE now has the AIRA framework, which allows users to host offline LLMs or SLMs in their hub. One of the benefits of this is that an analyst in the workspace can use the offline model to quickly generate analytical code using natural language prompts:

Given the relative reliability that models display in this task, this is a significant benefit even to experienced coders.

As noted above, federated analysis requires the user to have some coding ability, so using an LLM or SLM as an aid to federated analysis makes sense for both novice and expert users. We see this as a likely future area of development: a workflow that allows analysts to quickly generate and check code for federated analysis, then submit it for approval and execution against remote data sources. Our experiment with the Federated Node and an SLM takes this one step further.

What? Our success criteria and the limitations of using SLMs for data analysis

The question we set ourselves was: could a Federated Node be used as a mechanism for interacting with a remotely deployed SLM, and could this interaction be used to perform analysis on data hosted alongside the language model?

Our success criteria focused entirely on the FN as a mechanism for interacting with a deployed language model. The reliability issues of SLMs, and the security challenges they present, are well documented, so the quality of the model’s analysis was not considered a key factor in the success or failure of the experiment.

Our success criteria were:

- Can a user submit a natural language prompt to the Federated Node?

- Can the Federated Node pass the prompt to the deployed SLM?

- Upon receiving the prompt, does the SLM perform the requested task?

- On task completion, are the results returned to the user’s workspace?

As the video below demonstrates, we were successful on all counts:

How? An overview of the Federated Node AI

The Federated Node AI is available from the Aridhia Open Source organisation on GitHub.

It introduces an additional endpoint, `/ask`, for submitting prompts to the deployed SLM. It also retains the last 10 interactions between the user and the SLM, providing context when the user wants the SLM to iterate on its analysis. Otherwise, the FN AI retains the design of the standard Federated Node.
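The rolling history of the last 10 interactions can be sketched with a bounded queue. This is a minimal illustration under assumptions, not the FN AI's implementation: the class name `PromptHistory` and the role/content message format are hypothetical, chosen because chat-style model APIs commonly take the conversation as a list of such messages.

```python
from collections import deque


class PromptHistory:
    """Keep only the most recent prompt/response pairs for one user (hypothetical)."""

    def __init__(self, limit: int = 10) -> None:
        # A deque with maxlen silently drops the oldest pair once it is full,
        # so memory use and prompt length stay bounded.
        self._items: deque = deque(maxlen=limit)

    def record(self, prompt: str, response: str) -> None:
        """Store one completed exchange between the user and the SLM."""
        self._items.append((prompt, response))

    def as_messages(self) -> list[dict[str, str]]:
        """Flatten the history into chat messages, oldest first."""
        messages: list[dict[str, str]] = []
        for prompt, response in self._items:
            messages.append({"role": "user", "content": prompt})
            messages.append({"role": "assistant", "content": response})
        return messages

    def __len__(self) -> int:
        return len(self._items)
```

Keeping one `PromptHistory` per user (for example in a dictionary keyed by user ID) would stop one analyst's context leaking into another analyst's prompts.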

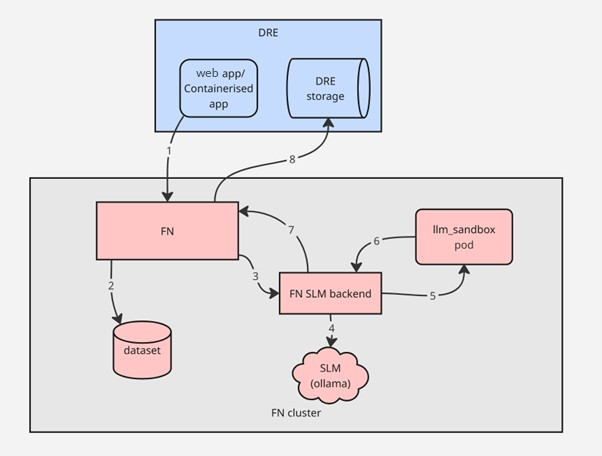

The diagram below provides a high-level overview of the FN AI workflow:

1) An app in the DRE sends a prompt, and the dataset to analyse, to the FN

2) The FN fetches the dataset and writes it to a CSV file

3) After checking that the user is authorised to send natural language queries, the FN sends the CSV and the prompt to the FN SLM backend as a background task, to avoid deadlocking its API

4) The SLM backend prepares the prompt and the query history (if any) and sends them to Ollama

5) Ollama returns a structured response containing the generated code. The SLM backend uses llm_sandbox to execute the generated code against the CSV data.

6) Once the llm_sandbox pod has finished, the results from a fixed output folder are packaged with the script

7) The results are sent back to the FN

8) Results are then redirected to the DRE inbox

For our experiment, Ollama was deployed with the Gemma 3 4B SLM (gemma3:4b).
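Steps 4 and 5 describe a request to Ollama that returns a structured response. The sketch below assumes the backend uses Ollama's `/api/chat` REST endpoint on its default local port, passing a JSON schema in the `format` field (Ollama's structured-outputs feature) so the reply can be parsed reliably. The single-field `code` schema, the system prompt and the function names are hypothetical; the FN AI's actual prompt and response format may differ.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

# Assumed response shape: one JSON object with the generated script in "code".
CODE_SCHEMA = {
    "type": "object",
    "properties": {"code": {"type": "string"}},
    "required": ["code"],
}


def build_chat_request(prompt: str, csv_columns: list[str],
                       history: list[dict[str, str]],
                       model: str = "gemma3:4b") -> dict:
    """Assemble a non-streaming chat request: system prompt, history, new prompt."""
    system = (
        "You write Python that analyses the file data.csv. "
        f"Its columns are: {', '.join(csv_columns)}. "
        "Write all outputs to the results/ folder."
    )
    messages = [{"role": "system", "content": system}, *history,
                {"role": "user", "content": prompt}]
    return {"model": model, "messages": messages,
            "stream": False, "format": CODE_SCHEMA}


def ask_ollama(payload: dict, url: str = OLLAMA_URL) -> str:
    """Send the request and return the generated code from the structured reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    # With a schema in "format", message.content is a JSON string matching it.
    return json.loads(body["message"]["content"])["code"]
```

Only the column names go into the prompt here, so the model writes code against the data's structure while the rows themselves stay with the sandbox that executes it.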

Next Steps

At present it seems likely that federated access will become increasingly common as a means of making sensitive data available for analysis, and similarly likely that LLMs and SLMs will be increasingly used when performing data analysis. Given these converging trends, using the Federated Node as a mechanism for interacting with an SLM feels like a timely experiment.

However, that is exactly what it is: an experiment, not the delivery of a completed product.

As noted above, the issues around LLM security and reliability, particularly with regard to mathematical reasoning, are well established, not to mention the costs of running these models in the data owners’ infrastructure. Our underlying assumption is that these problems will be resolved by two developments:

- Smaller, more specialised, and more efficient language models.

- The emergence of mature workflow patterns for using language models in data analysis, including security and output checks that prevent data exfiltration through prompt engineering.

In the DRE we will continue to develop the AIRA framework, working with our customers to establish how and where an LLM or SLM can be integrated with their analytical workflow.

With regards to data federation there are two possible avenues of exploration:

- Using the FN to call a model deployed with AIRA inside a workspace, and initiating an analytical task on data held in the workspace. Results are then released to the user following appropriate checks.

- Using an AIRA SLM as an orchestrator which initiates tasks in one or more deployed FNs, each of which is associated with its own remotely deployed SLM. The results of the remote analysis are then aggregated in the user’s workspace.

If you would like to know more about federation work in general, or the FN AI in particular, please get in touch.

September 30, 2025

Ross Stiven

Ross joined the Aridhia Product Team in January 2022. He is the Product Owner for FAIR Data Services, and Aridhia's open source federation project. He works with our customers to understand their needs, and with our Development Team to introduce new features and improve our products. Outside of work, he likes to go hill walking and is slowly working his way through Scotland's Munros.