[Im]patient Data Scientist and Oncology Real-World Evidence

I was honored to take part in the Oncology Real-World Evidence (RWE) Workshop, hosted in Washington, D.C. last month by Friends of Cancer Research, the Reagan-Udall Foundation for the FDA, Aetion, and the Duke-Margolis Center for Health Policy, which also provided the venue. The release of the video recording of the workshop prompted me to summarize some of the key points and the thoughts that stayed with me.

• There is tremendous untapped potential in real-world data (RWD) and its value spans more than medical product development.

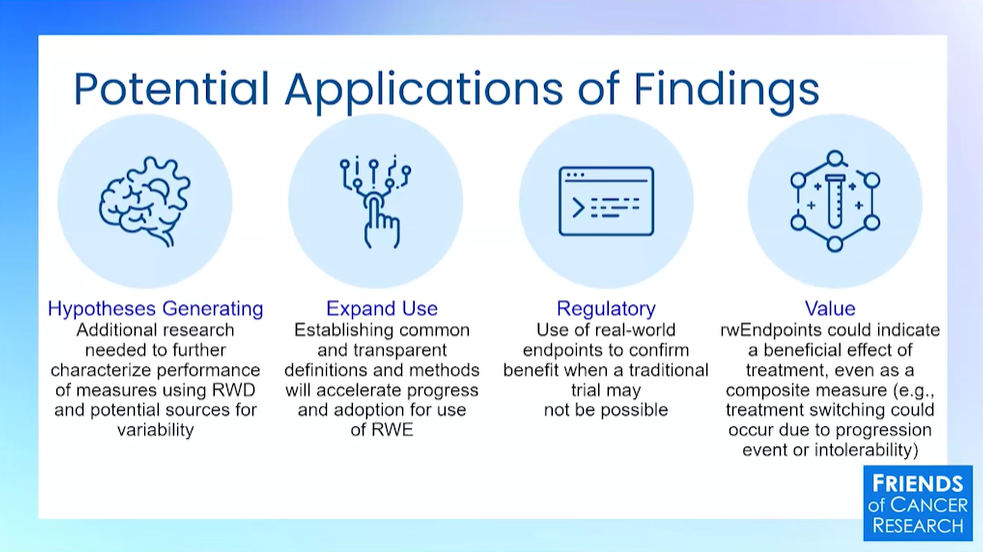

There was a lot of good discussion in our first panel of the day about the applications of RWD beyond regulatory engagements. I like the slide shown by Jeff Allen of Friends of Cancer Research (above), which gives a concise overview of the categories.

If I had to sum up one overarching message from the first set of talks, it would be that thinking of safety monitoring as RWD’s highest and best use is myopic. Label expansions, shared control arms, early phase studies, and more are on the table now. But we also spend an awful lot of clinicians’ time collecting data, and it should help them make clinical decisions about standards of care and evolving practices with patients in the… wait for it… real world.

• Work must be done to unify data longitudinally across systems, research, trials, and RWD.

Here I will shamelessly plug my own slides and discussion. The TL;DR is that a typical electronic health record captures only a tiny snapshot in time from the entire patient journey, and that limits its usefulness. Interoperability means that we can connect records as the patient moves from one hospital to another or from a hospital to a pharmacy. But if we want a truly informative view of diseases’ natural history and patient outcomes over the longer term, we need to connect across time with other types of data, so that patients in studies are followed through RWD and patients in trials have RWD to describe what happened before the treatment in question.
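To make the longitudinal idea concrete, here is a minimal sketch in Python. It assumes, optimistically, that every source already shares a reliable patient identifier; in practice, establishing that link is much of the work. The `Event` fields, the source labels, and the sample records are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    patient_id: str   # assumed to be consistent across sources
    when: date
    source: str       # hypothetical labels: "ehr", "trial", "pharmacy"
    description: str


def build_timelines(*sources):
    """Merge events from any number of sources into one date-ordered list per patient."""
    timelines = defaultdict(list)
    for events in sources:
        for event in events:
            timelines[event.patient_id].append(event)
    for events in timelines.values():
        events.sort(key=lambda e: e.when)
    return dict(timelines)


# Made-up records from three separate systems.
ehr = [Event("P001", date(2021, 3, 2), "ehr", "diagnosis recorded")]
trial = [Event("P001", date(2022, 1, 10), "trial", "enrolled in study")]
pharmacy = [Event("P001", date(2020, 11, 5), "pharmacy", "prescription filled")]

timelines = build_timelines(ehr, trial, pharmacy)
for event in timelines["P001"]:
    print(event.when, event.source, event.description)
```

Each source on its own is a snapshot. Only the merged, date-ordered list shows what happened before and after the trial.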

• Data collection is important, and natural language processing cannot find something that was not entered in notes.

Dr. Laura Esserman drove home the point that data collection needs to be standardized, and I couldn’t agree more. I love her for self-identifying as ‘rationally deluded’ about the future. Physician notes are commonly needed for analyses that require patients with a certain condition or diagnosis, but they come with challenges. Notes can never meet the legal definition of de-identified according to HIPAA and some interpretations of GDPR, for example, because there is no limit to what a physician might add to notes and no way for a computer to scrub it all out. That means sharing data from physician notes can be challenging or even impossible in some circumstances.

Moreover, I hear lots of hype about AI tools making data entry practices a non-issue, but no tool can identify a condition that wasn’t mentioned in text. Laura Fernandez from COTA Healthcare made some great points about analyzing data and sharing it securely. Her team recently published a blog that discusses the current status of AI in the RWD setting, and it reinforces the point that data collection matters.

Thankfully, Molly Prieto from USCDI+ at the FDA’s Oncology Center of Excellence discussed standards for data collection during the third panel of the day. I had a chance to discuss this more on a break with Shannon Silkenson from National Cancer Institute, and I was really energized to hear about these efforts to define a minimum set of data elements that should be collected for oncology use cases. I will definitely keep this working group’s activities in my field of view.

• The features of RWD that elevate it to RWE should also be required for using RWD to treat patients!

In the closing panel, I really appreciated Dr. Paul G. Kluetz from the FDA’s Oncology Center of Excellence when he boldly stated, “If we’re going to make a clinical decision [based] on this evidence, it has to be high-quality. And I don’t want to see different tiers of evidence to make the most, to me, critically important decisions for patients.”

Broadly, the FDA has said that for RWD to be used as evidence in regulatory decision-making, it must have good provenance, with an audit trail of data sources, manipulation, and analyses. RWD must also be fit for the regulatory purpose, and analyses used as evidence should account for the biases inherent in data gathered outside of a randomized controlled trial. As a patient, I’d also like the same rigor, please and thank you.
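For readers who like to see what an audit trail might look like in practice, here is a minimal sketch in Python. It is not the FDA's specification or any vendor's implementation. The `AuditTrail` class, the step names, and the sample records are hypothetical. The only idea it illustrates is that each manipulation is logged with a fingerprint of the data going in and coming out, so undocumented changes can be detected later.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(records):
    """Return a SHA-256 hash of the records in a canonical JSON form."""
    canonical = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


class AuditTrail:
    """Hypothetical helper: holds a dataset plus a log of every step applied to it."""

    def __init__(self, source_name, records):
        self.records = records
        self.log = [self._entry("load:" + source_name, None, fingerprint(records))]

    @staticmethod
    def _entry(step, input_hash, output_hash):
        return {
            "step": step,
            "input_hash": input_hash,
            "output_hash": output_hash,
            "at": datetime.now(timezone.utc).isoformat(),
        }

    def apply(self, step_name, transform):
        """Run a transformation and log the data fingerprints before and after it."""
        before = fingerprint(self.records)
        self.records = transform(self.records)
        self.log.append(self._entry(step_name, before, fingerprint(self.records)))
        return self

    def verify(self):
        """True if the logged steps chain together and end at the current data."""
        for prev, cur in zip(self.log, self.log[1:]):
            if cur["input_hash"] != prev["output_hash"]:
                return False
        return self.log[-1]["output_hash"] == fingerprint(self.records)


# Made-up extract with one incomplete record.
raw = [{"patient": "P001", "stage": "II"}, {"patient": "P002", "stage": None}]
trail = AuditTrail("hypothetical_ehr_extract", raw)
trail.apply("drop_missing_stage", lambda rows: [r for r in rows if r["stage"] is not None])
print(trail.verify())
```

If someone edits `trail.records` without going through `apply`, `verify()` returns `False`. That is the kind of traceability I would want behind any clinical claim.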

Even in prestigious academic centers, there is opportunity for published studies to include questionable data practices. Whether through inexperience, incompetence, or something more nefarious, it is possible to go wrong when deriving RWD from electronic health records or at intermediate steps. I’d like to know that knowledge about clinical practice is of the same quality and provenance that would be required for regulatory submission.

Aridhia’s Digital Research Environment (DRE) makes it easy to collaborate on RWD and elevate it to the standard of RWE. FHIR API endpoints make electronic health records accessible in a standardized way, and the security certifications for the DRE reduce risk, while pseudonymization tools and synthetic data provide options to take a step further. All data collaborations on analysis happen in an audited, secure virtual environment, and mathematical models can be transparently shared for editorial review. To me, there’s no excuse for skipping the rigor for any purpose, even if the data are from the messy ‘real’ world.

March 26, 2024

Amanda Borens

Amanda (M.S.) joined Aridhia in 2022 and serves as Chief Data Officer. She is a data science leader with 25+ years’ experience across public and private sectors, including academia, healthcare, and biotech. Amanda has a unique breadth of life science data curation and analysis experience, having led technical teams in all phases of the FDA-regulated medical software development lifecycle for customers on three continents as well as in medical device development where her team achieved FDA clearance and CE Mark from EMA. This breadth of experience helps her provide innovative data solutions to solve scientific problems while respecting global regulatory requirements.