Blogs & News

Working with LLMs Securely and Safely

AI and Healthcare Data

In Amanda’s recent blog she discussed what she’s hearing in the marketplace with regards to the applications of AI in healthcare, particularly in bioinformatics and life sciences. In drug discovery, where AI analyses vast datasets, identifying drug candidates and optimising molecular structures or medical imaging where AI algorithms analyse biomedical images, enabling faster and more quantitative comparisons of cell characteristics, and, at the other end of the spectrum, AI can support near real-time clinical decision making, assist clinicians in diagnosing diseases, predicting patient outcomes, and recommending treatment options.

We really are on the cusp of a technological revolution which will have huge ramifications for all our futures.

However, in each of these examples, there is one consistent feature that needs to be considered. The data involved are highly sensitive, often identifiable, sourced from real people. So, while AI offers immense potential, working with sensitive and potentially identifiable data presents real challenges.

Patient privacy must be protected. There must be no possibility of re-identification. Innovation must be balanced with ethical guidelines and there must be full transparency of AI-based decision-making. Data must be protected from unauthorised access and prevention of exfiltration and breaches require robust security measures.

Training an AI model requires data. They have an insatiable appetite for data. Learning from those data to become more accurate, reduce bias and remove the likelihood of hallucinations. However, much healthcare data is simply not suitable for use in training such models. The risk of re-identification is too great, the systems and data management too opaque. Other technologies or techniques are required.

LLMs and the Security Dilemma

Large language models (LLMs) are powerful tools for natural language processing, but they also pose ethical and legal challenges when trained on sensitive medical data.

The data security dilemma with LLMs is that they may expose or leak sensitive or confidential information that they have learned from the data they are trained on, and once trained – an LLM will not forget what it has learned.

LLMs may then inadvertently leak or reveal personal or confidential information that they have learned from the data, such as names, diagnoses, or treatments. This can violate the privacy and consent of the data subjects, as well as the regulations and policies that govern the use and sharing of medical data.

One potential solution is to use an offline LLM that is not connected to any external network. Offline LLMs can be trained locally, with no interaction with an external network. These still require careful data pre-processing, anonymisation and, importantly, balancing data representativeness and diversity to avoid bias in any results.

There are many offline models: many are open and freely available – but to use effectively require significant compute resources. Meta’s pre-trained llama-2 model can be run offline and comes in 3 sizes; 7B, 13B and 70B parameters. Even at the ‘smallest’ 7B parameter scale, it requires significant GPU, CPU and RAM capabilities – often beyond the specifications of a typical desktop or laptop. As a result, researchers need access to a platform that can run an offline model, with adequate compute, with the security and governance controls in place as expected when working with sensitive healthcare data.

The other alternative is to use a dedicated service such as Azure OpenAI. It does not use your data to improve or train other models, unless you choose to use it to train customised AI models. This can be a more cost-effective alternative to deploying and running an offline LLM. However, full audit of the use of this service and service access governance and control are important to ensure prevent misuse.

The benefit of the pre-trained model is that it comes with vast levels of information, with some models specially trained to favour certain areas. Meditron, a model trained on healthcare papers and publications or the recently announced, Mistral Large, that excels at code generation with structured JSON output for machine readability.

However, use of LLMs in healthcare applications is very much experimental. Diagnosis or drug dosing recommendations will require human input and validation. There remain real questions around reproducibility and accuracy of code and query generation. When analysing a large data set using such models, how can you be sure the answer is correct? You can’t argue with code. There may be bugs in the code, but it is absolutely specific and not open to interpretation. However, there’s currently no way to know the reasoning and decisions an AI model has made to come to a conclusion. At this stage, our customers and partners are experimenting with these technologies, in safe and secure Aridhia DRE workspaces, comparing results from various models and comparisons with other technologies, assured that the data cannot leave their environment or be used to help train open models.

However, use of LLMs in healthcare applications is very much experimental. Diagnosis or drug dosing recommendations will require human input and validation. There remain real questions around reproducibility and accuracy of code and query generation. When analysing a large data set using such models, how can you be sure the answer is correct? You can’t argue with code. There may be bugs in the code, but it is absolutely specific and not open to interpretation. However, there’s currently no way to know the reasoning and decisions an AI model has made to come to a conclusion. At this stage, our customers and partners are experimenting with these technologies, in safe and secure Aridhia DRE workspaces, comparing results from various models and comparisons with other technologies, assured that the data cannot leave their environment or be used to help train open models.

In both of these options, either the offline LLM or use of Azure OpenAI services, the Aridhia DRE is the perfect solution.

The secure Aridhia DRE collaborative workspaces provide the necessary security controls and governance expected. These include:

• Data governance and privacy: The Aridhia DRE ensures that data is securely stored and accessed only by authorised users for specific and ethical purposes. It also complies with various data protection regulations, such as GDPR and HIPAA. Data cannot enter or leave a workspace without express approval.

• Collaboration and knowledge sharing: The Aridhia DRE facilitates collaboration and knowledge sharing among researchers, clinicians, and other stakeholders. It allows users to create and join private workspaces, share data and code, and publish and reuse research outputs.

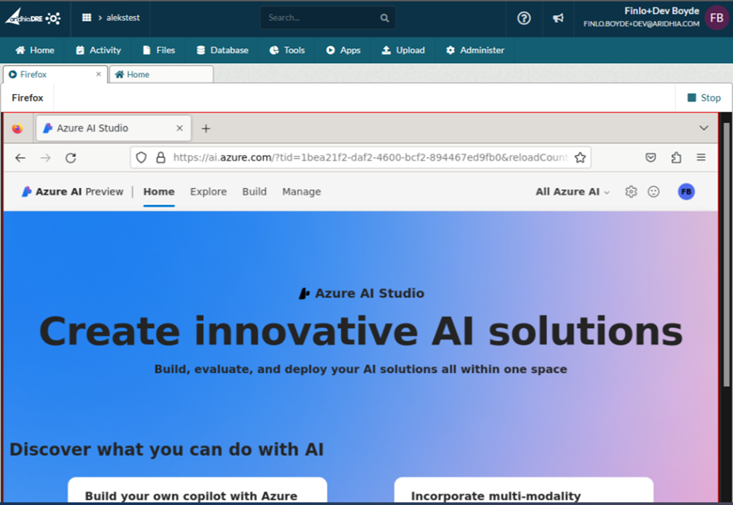

• Analytics and machine learning: The Aridhia DRE provides access to a range of analytics and machine learning tools, such as access to Azure ML, and Azure Open AI. It also offers scalable infrastructure, providing access to virtual machines with GPU acceleration. Ideally suited to large scale offline LLM experimentation.

Figure 1. Azure AI Studio from inside a workspace using a hosted browser, without the need for a Virtual Machine.

Real Possibilities, Available Now

Access to LLMs within the secure confines of a workspace provides capabilities to both researchers and clinicians. EHRs contain rich and longitudinal information about patients’ demographics, diagnoses, treatments, outcomes, and other clinical data. LLMs can be used to enhance EHRs by performing tasks such as medical transcription, clinical decision support, predictive health outcomes, and personalised treatment plans.

Code copilots can be used to generate Python or R code for analysis of structured data. Data can be provided to the offline LLM and questions asked of that data. Experimentation is taking place to compare the output of a LLM natural language query with code and SQL based approaches.

R Shiny applications can be developed by coders to enable access to LLMs trained on a large cohorts of healthcare data that the workspace has been granted access to, in order to provide a ‘no-code’ natural language query on data.

LLMs can assist in harmonisation or the transformation to a common data model such as OMOP (see last blog from Eoghan), by identifying common data types and assisting in mapping between datasets consistently.

This technology will only improve from here on. We are already interacting daily with AI from predictive text on our smartphones, to Netflix entertainment recommendations, to assistant chatbots, internet search replacements and only a few weeks ago OpenAI revealed Sora, a text to video creation tool. Use of LLMs to query data, assist with trends and diagnosis, help with labeling and classification of medical images and finding trends in vast volumes of streaming data from wearables will accelerate positive outcomes for all, and as the biotech and AI technological fields combine, the calls for regulation, control, governance, audit and containment of these technologies within secure spaces will only grow louder.

February 29, 2024

Scott Russell

Scott joined Aridhia in March 2022 with over 25 years’ experience in software development within small start-ups and large global enterprises. Prior to Aridhia, Scott was Head of Product at Sumerian, a data analytics organisation acquired by ITRS in 2018. As CPO, he is responsible for product capabilities and roadmap, ensuring alignment with customer needs and expectations.